Errata

ERROR in CASE-62

Originally, I had listed Sotomayor as both the Author and as Joining in this decision. The Raw Data Files (pickle, json, txt) have been corrected. But I have not rerun (and will not be rerunning) the programs which created the graphs on the previous pages.

It's not a major error. And I question whether it affects a single line of Analysed Output. But it is an error. And I told you there would be errors. So, I was right in being wrong.

And now, onto the main event.

Code

2018_judges_analyze_A5.txt is the code I used to create the graphs on this page (using the data as discussed elsewhere). Technically, there's not much new. From a programming standpoint, it's a simple continuation of a theme.

Comparative Analysis

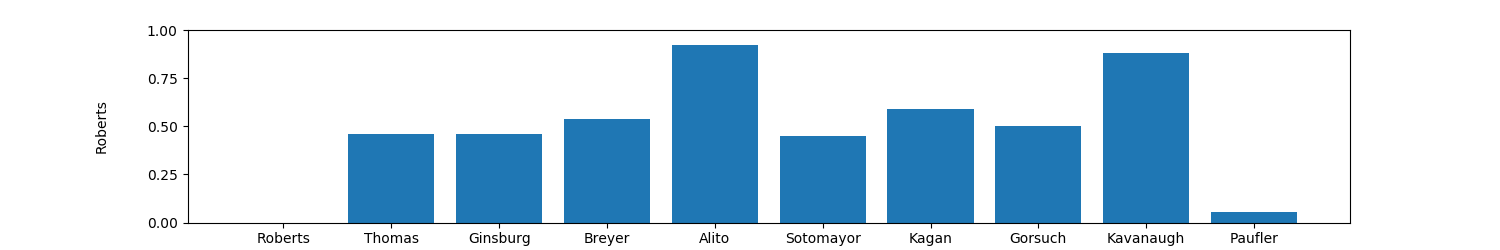

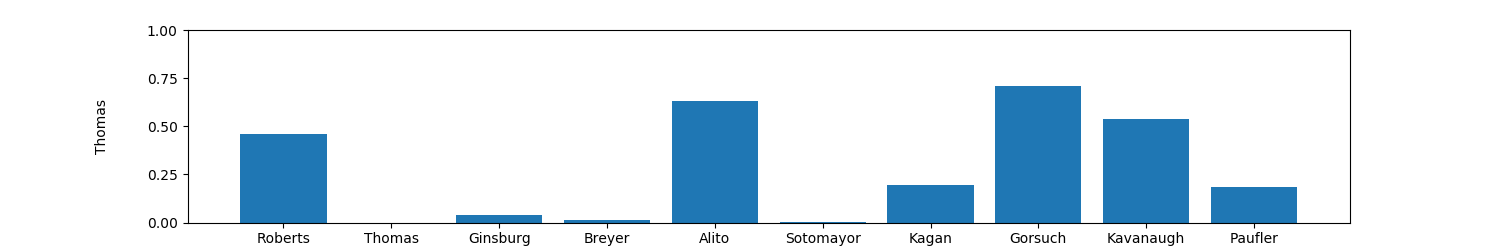

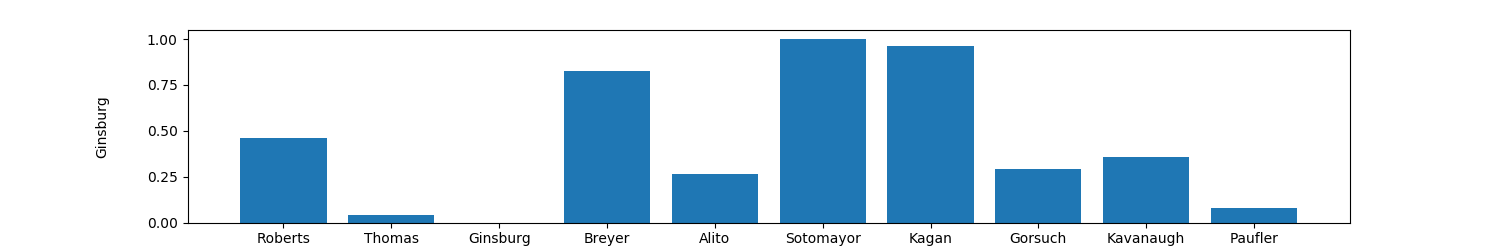

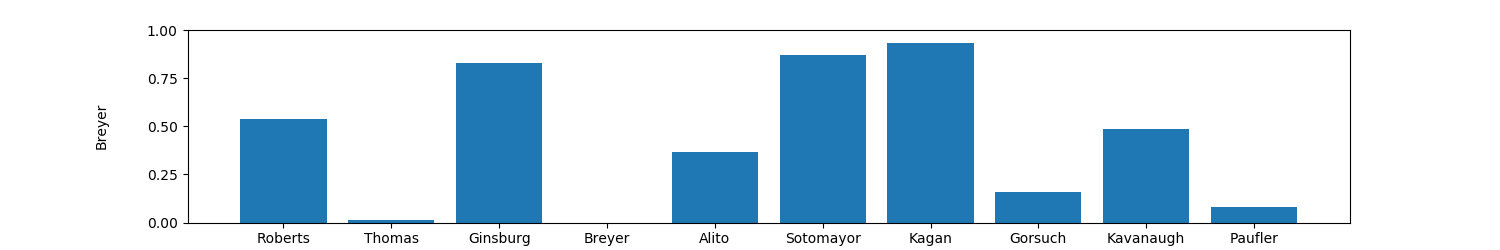

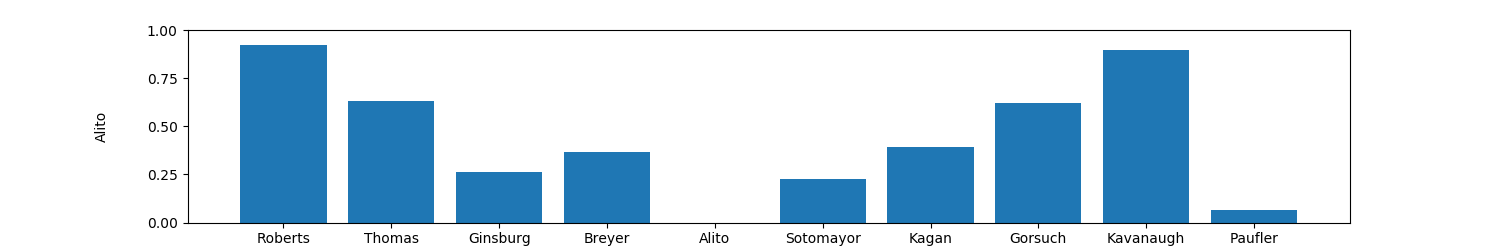

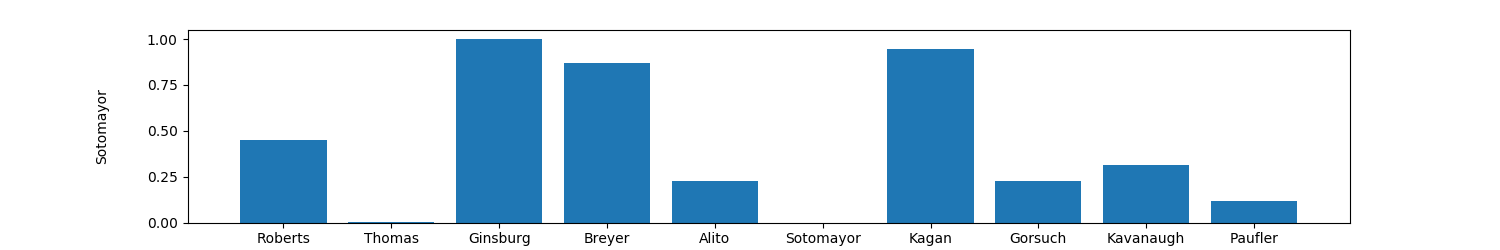

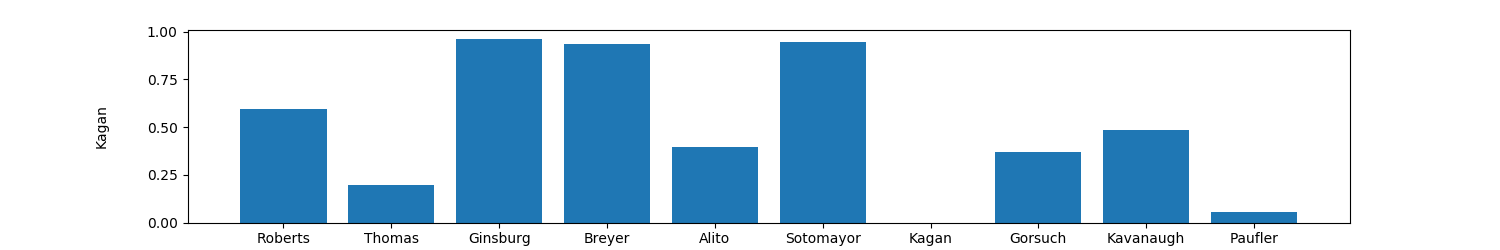

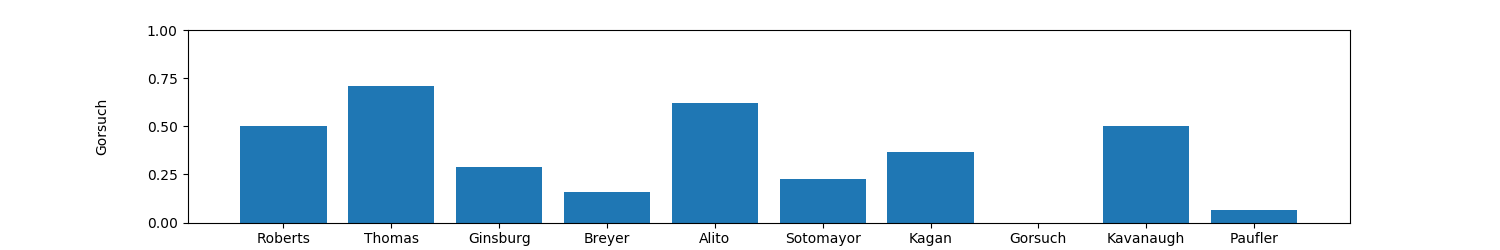

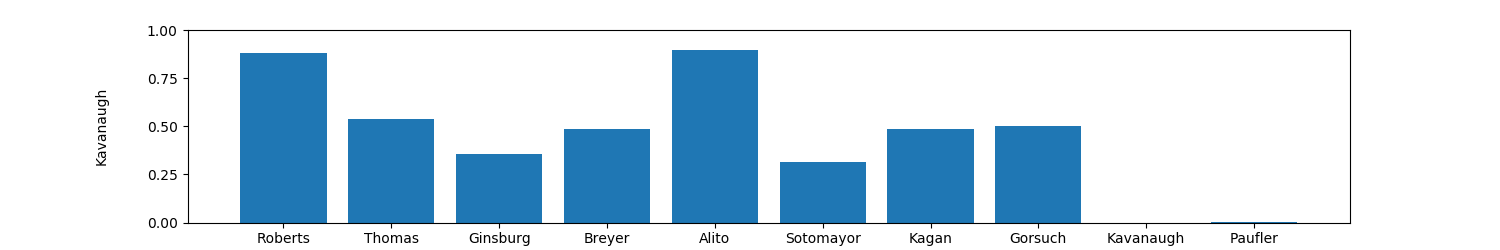

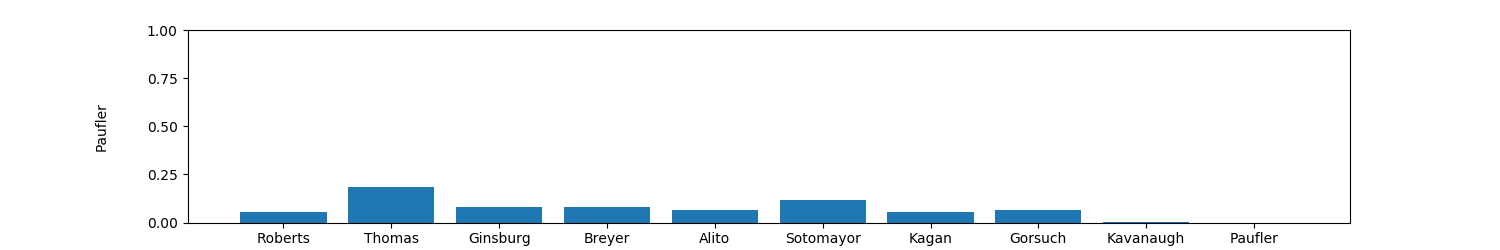

Given an Opinion, how likely is it that one Judge Agreed with another?

- List All Opinions

- For Each Opinion

  - +1 If Both Judges Agree with Opinion

  - +1 If Both Judges Disagree with Opinion

- Divide by 168 (Number of Total Opinions in All Cases) to Normalize between 0.0 & 1.0.
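The steps above can be sketched in a few lines of Python. This is only an illustration, not the code in 2018_judges_analyze_A5.txt: the function name `agreement_rates`, the toy data, and the choice to represent each Opinion as the set of Judges who agreed with it (so anyone missing from the set counts as disagreeing) are all assumptions.

```python
from itertools import combinations


def agreement_rates(opinions, judges):
    """Return {(a, b): share of opinions on which a and b took the same side}.

    ASSUMPTION: each opinion is the set of judges who agreed with it;
    a judge absent from the set is treated as disagreeing.
    """
    counts = {pair: 0 for pair in combinations(judges, 2)}
    for agreeing in opinions:
        for a, b in counts:
            # +1 if both agree with the opinion, +1 if both disagree with it
            if (a in agreeing) == (b in agreeing):
                counts[(a, b)] += 1
    total = len(opinions)  # 168 in the post; 4 in the toy data below
    return {pair: n / total for pair, n in counts.items()}


# Hypothetical toy data, not taken from the real data files.
judges = ["Roberts", "Alito", "Ginsburg", "Sotomayor"]
opinions = [
    {"Roberts", "Alito"},
    {"Ginsburg", "Sotomayor"},
    {"Roberts", "Alito", "Ginsburg"},
    {"Sotomayor"},
]
rates = agreement_rates(opinions, judges)
print(rates[("Roberts", "Alito")])       # 1.0
print(rates[("Ginsburg", "Sotomayor")])  # 0.5
```

Counting shared disagreement as agreement is what keeps every pair's score from sinking toward zero: two Judges who both decline to join an Opinion still earn a point.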

Each Judge (by definition) agrees with themselves all of the time. These are the only values near 1.0 in the above. So, I zeroed them out. In the graphs that follow, Roberts v Roberts = 0.0.

Further (per the graphs above), the values clump around the middle of the range, so I stretched the data (in the graphs below) by using the following formula.

    ratings = [(r - 0.422619047619) /
               (0.875 - 0.422619047619)
               for r in ratings]
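The formula is a plain min-max rescale. Judging by the values listed with the graphs, 0.422619047619 (71 of 168) looks like the lowest pairwise score and 0.875 (147 of 168) the highest, though that reading is an inference from the data rather than something stated outright. A small sketch, with the helper name `stretch` and the three sample values (Roberts against Thomas, Alito, and Paufler) chosen purely for illustration:

```python
def stretch(ratings, low, high):
    """Linearly rescale ratings so that low maps to 0.0 and high maps to 1.0."""
    span = high - low
    return [(r - low) / span for r in ratings]


# ASSUMPTION: the two constants are the minimum and maximum pairwise scores.
LOW = 71 / 168    # 0.4226190476...
HIGH = 147 / 168  # 0.875 (Ginsburg v Sotomayor)

# Roberts v Thomas, Roberts v Alito, Roberts v Paufler, copied from the listed values.
roberts = [0.6309523809523809, 0.8392857142857143, 0.44642857142857145]
stretched = stretch(roberts, LOW, HIGH)
print([round(s, 3) for s in stretched])  # [0.461, 0.921, 0.053]
```

Under that reading, a self-comparison stored as 0.422619047619 lands on exactly 0.0 after the stretch, which matches Roberts v Roberts = 0.0.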

I believe the reason for this clumping is that there are many Supreme Court Cases (say, about 42.26% of them), which are easily decided by Law: i.e. that any Reasonable Jurist would come to the same conclusion. The Cases are straightforward and uncontroversial.

Of course, I disagreed with The Court in regards to many of these uncontroversial decisions. But that's because I don't really care about The Law.

Which is to say, The Judges Decided a Case (I presume; or at least, in theory) on What Is. Whereas, I Decided each case (or at least, some of them) on What Should Be.

Thus, my low level of agreement not only reflects my dissatisfaction with The Court's Rulings; but also, can be taken as a gauge of my relative level of unreasonableness.

The first Obvious Observation (my favourite kind) is that the two Groups of Graphs differ. I got different outputs from the same inputs. I mean, I always sort of knew this was theoretically possible. But this project has really highlighted the inevitable subjectiveness of certain facts to me. I've really just scratched the surface in regards to the possible graphs (as they are without number). But it's pretty clear (to me, anyway) that if I'd had an agenda (other than projecting my greatness out into The Void) and even if I did have an agenda (which, undoubtedly, would involve projecting my greatness out into The Void), I'd be more inclined to see what I could glean from the static (i.e. the preceding graphs) and in that way (wait for it) project my greatness to an otherwise unsuspecting public: i.e. The Void.

Secondly, some of the groupings I'd expected are much easier to see in the second group of graphs than the first.

Ginsburg, Breyer, Sotomayor, and Kagan seem to group.

Whereas Thomas and Alito (and, to a lesser extent, Roberts and Kavanaugh) do not group as clearly as I would have expected.

Seriously, I very much expected Thomas and Alito to have a higher level of agreement. Though, this last might have more to do with Thomas' habit of writing Outlier Opinions.

Finally, I will note that by this methodology, my opinions correlate with Thomas' better than with anyone else's.

Any conclusions really are dependent upon how one slices the data.

![Roberts Comparison of Agreement between Roberts and other judges - OrderedCounter(OrderedDict([('Roberts', 0.422619047619), ('Thomas', 0.6309523809523809), ('Ginsburg', 0.6309523809523809), ('Breyer', 0.6666666666666666), ('Alito', 0.8392857142857143), ('Sotomayor', 0.625), ('Kagan', 0.6904761904761905), ('Gorsuch', 0.6488095238095238), ('Kavanaugh', 0.8214285714285714), ('Paufler', 0.44642857142857145)]))](./images/2018_A5_Roberts.png)

![Thomas Comparison of Agreement between Thomas and other judges - OrderedCounter(OrderedDict([('Roberts', 0.6309523809523809), ('Thomas', 0.422619047619), ('Ginsburg', 0.44047619047619047), ('Breyer', 0.42857142857142855), ('Alito', 0.7083333333333334), ('Sotomayor', 0.4226190476190476), ('Kagan', 0.5119047619047619), ('Gorsuch', 0.7440476190476191), ('Kavanaugh', 0.6666666666666666), ('Paufler', 0.5059523809523809)]))](./images/2018_A5_Thomas.png)

![Ginsburg Comparison of Agreement between Ginsburg and other judges - OrderedCounter(OrderedDict([('Roberts', 0.6309523809523809), ('Thomas', 0.44047619047619047), ('Ginsburg', 0.422619047619), ('Breyer', 0.7976190476190477), ('Alito', 0.5416666666666666), ('Sotomayor', 0.875), ('Kagan', 0.8571428571428571), ('Gorsuch', 0.5535714285714286), ('Kavanaugh', 0.5833333333333334), ('Paufler', 0.4583333333333333)]))](./images/2018_A5_Ginsburg.png)

![Breyer Comparison of Agreement between Breyer and other judges - OrderedCounter(OrderedDict([('Roberts', 0.6666666666666666), ('Thomas', 0.42857142857142855), ('Ginsburg', 0.7976190476190477), ('Breyer', 0.422619047619), ('Alito', 0.5892857142857143), ('Sotomayor', 0.8154761904761905), ('Kagan', 0.8452380952380952), ('Gorsuch', 0.49404761904761907), ('Kavanaugh', 0.6428571428571429), ('Paufler', 0.4583333333333333)]))](./images/2018_A5_Breyer.png)

![Alito Comparison of Agreement between Alito and other judges - OrderedCounter(OrderedDict([('Roberts', 0.8392857142857143), ('Thomas', 0.7083333333333334), ('Ginsburg', 0.5416666666666666), ('Breyer', 0.5892857142857143), ('Alito', 0.422619047619), ('Sotomayor', 0.5238095238095238), ('Kagan', 0.6011904761904762), ('Gorsuch', 0.7023809523809523), ('Kavanaugh', 0.8273809523809523), ('Paufler', 0.4523809523809524)]))](./images/2018_A5_Alito.png)

![Sotomayor Comparison of Agreement between Sotomayor and other judges - OrderedCounter(OrderedDict([('Roberts', 0.625), ('Thomas', 0.4226190476190476), ('Ginsburg', 0.875), ('Breyer', 0.8154761904761905), ('Alito', 0.5238095238095238), ('Sotomayor', 0.422619047619), ('Kagan', 0.8511904761904762), ('Gorsuch', 0.5238095238095238), ('Kavanaugh', 0.5654761904761905), ('Paufler', 0.47619047619047616)]))](./images/2018_A5_Sotomayor.png)

![Kagan Comparison of Agreement between Kagan and other judges - OrderedCounter(OrderedDict([('Roberts', 0.6904761904761905), ('Thomas', 0.5119047619047619), ('Ginsburg', 0.8571428571428571), ('Breyer', 0.8452380952380952), ('Alito', 0.6011904761904762), ('Sotomayor', 0.8511904761904762), ('Kagan', 0.422619047619), ('Gorsuch', 0.5892857142857143), ('Kavanaugh', 0.6428571428571429), ('Paufler', 0.44642857142857145)]))](./images/2018_A5_Kagan.png)

![Gorsuch Comparison of Agreement between Gorsuch and other judges - OrderedCounter(OrderedDict([('Roberts', 0.6488095238095238), ('Thomas', 0.7440476190476191), ('Ginsburg', 0.5535714285714286), ('Breyer', 0.49404761904761907), ('Alito', 0.7023809523809523), ('Sotomayor', 0.5238095238095238), ('Kagan', 0.5892857142857143), ('Gorsuch', 0.422619047619), ('Kavanaugh', 0.6488095238095238), ('Paufler', 0.4523809523809524)]))](./images/2018_A5_Gorsuch.png)

![Kavanaugh Comparison of Agreement between Kavanaugh and other judges - OrderedCounter(OrderedDict([('Roberts', 0.8214285714285714), ('Thomas', 0.6666666666666666), ('Ginsburg', 0.5833333333333334), ('Breyer', 0.6428571428571429), ('Alito', 0.8273809523809523), ('Sotomayor', 0.5654761904761905), ('Kagan', 0.6428571428571429), ('Gorsuch', 0.6488095238095238), ('Kavanaugh', 0.422619047619), ('Paufler', 0.4226190476190476)]))](./images/2018_A5_Kavanaugh.png)

![Paufler Comparison of Agreement between Paufler and other judges - OrderedCounter(OrderedDict([('Roberts', 0.44642857142857145), ('Thomas', 0.5059523809523809), ('Ginsburg', 0.4583333333333333), ('Breyer', 0.4583333333333333), ('Alito', 0.4523809523809524), ('Sotomayor', 0.47619047619047616), ('Kagan', 0.44642857142857145), ('Gorsuch', 0.4523809523809524), ('Kavanaugh', 0.4226190476190476), ('Paufler', 0.422619047619)]))](./images/2018_A5_Paufler.png)